Kaleb S. NewmanI'm a first-year Ph.D. student in Computer Science at Princeton University, where I work in the Visual AI Lab advised by Dr. Olga Russakovsky. My research interests lie broadly in computer vision, with a particular focus on how machines and humans internally reason about and represent the visual world. I received my Sc.B. in Computer Science from Brown University in 2025, focused in Artificial Intelligence and Visual Computing. At Brown, I was fortunate to be advised by Dr. Chen Sun and Dr. Tomas Serre, and I also spent a summer at the University of Rochester working with Dr. Zhen Bai. Please feel free to reach out to chat about research or advice! I'm always interested in discussing computer vision research, machine learning, artificial intelligence, and academic opportunities. |

|

Research

My research interests lie broadly in computer vision, with a particular focus on how machines and humans internally reason about and represent the visual world. |

News

|

|

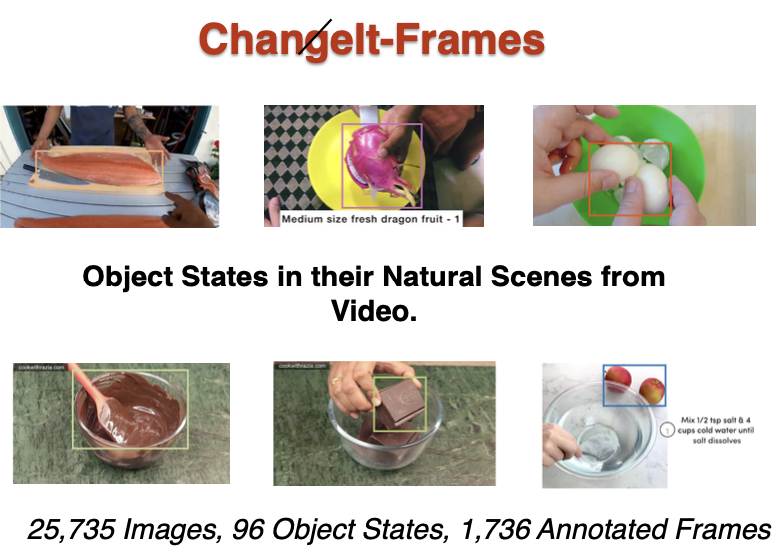

Do Pre-Trained Vision-Language Models Encode Object States?

K. Newman*, Shijie Wang, Yuan Zang, David Heffren, Chen Sun ECCV 2024 Workshop on Emergent Visual Abilities and Limits of Foundation Models arXiv We investigate whether pre-trained vision-language models encode information about object states, finding that they are skilled at recognizing objects but struggle with distinguishing their physical states. |

|

Leveraging Usefulness and Autonomy: Designing AI-Mediated ASL Communication Between Hearing Parents and Deaf Children

Yifan Li, Hecong Wang, Ekram Hossain, Madeleine Mann, Jingyan Yu, Kaleb Slater Newman, Ashley Bao, Athena Willis, Chigusa Kurumada, Wyatte C Hall, Zhen Bai Proceedings of the 24th Interaction Design and Children, 512-526 paper We explore the design of AI-mediated ASL communication technologies to support parent-child interactions between hearing parents and deaf children. |

|

|

Supporting ASL Communication Between Hearing Parents and Deaf Children

E. Houssain, K. Newman, A. Bao, M. Mann, Y. Li, H. Wang, W. Hall, C. Kurumada, Z. Bai ACM SIGACCESS Conference on Computers and Accessibility paper We present a system to support ASL communication between hearing parents and deaf children, addressing accessibility challenges in family communication. |

|

|

Building User-Centered ASL Communication Technologies for Parent-Child Interactions

A. Bao*, K. Newman*, M. Mann, E. Houssain, C. Kurumada, Z. Bai MIT Undergraduate Research & Technology Conference 2023 (Top 5 paper distinction) paper We present a user-centered approach to developing ASL communication technologies, focusing on the needs of hearing parents and deaf children. |

|

Shoutout to Jon Barron for this website template! |